Running Terraform across AWS and Azure at the same time can be challenging, but a solid structure helps you scale without duplication or chaos.

Here’s how I do it:

-

Be very specific

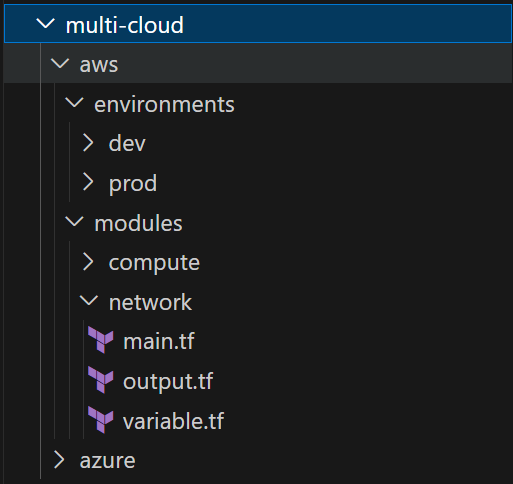

- Create a “multi-cloud” folder.

- Under “multi-cloud”, create AWS and Azure into their own folders.

- Each has its own main.tf, variables.tf, and outputs.tf to keep cloud logic clean and separate.
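As a minimal sketch of the AWS side of that layout, the three files might look like this. The folder paths, the `aws_region` variable, its default, and the version constraint are all illustrative assumptions; the Azure folder would mirror the same three files with the `azurerm` provider.

```hcl
# multi-cloud/aws/main.tf (hypothetical path)
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed constraint; pin to what you have tested
    }
  }
}

provider "aws" {
  region = var.aws_region
}

# multi-cloud/aws/variables.tf
variable "aws_region" {
  type        = string
  description = "Region for every AWS resource in this root module."
  default     = "us-east-1" # assumed default
}

# multi-cloud/aws/outputs.tf
output "aws_region" {
  description = "Region this configuration deploys to."
  value       = var.aws_region
}
```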

- Use modules

  - Create a “modules” folder.

  - Inside “modules”, define shared building blocks (e.g., AWS storage, Azure compute).

  - Call them from the main configs. Write once, reuse many times.
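Here is a rough sketch of one such building block and a call to it. The module name `aws-storage`, the `bucket_name` input, and the bucket name are made up for illustration, and the relative `source` path assumes “modules” sits next to “multi-cloud”.

```hcl
# modules/aws-storage/main.tf (hypothetical module)
variable "bucket_name" {
  type        = string
  description = "Name of the S3 bucket to create."
}

resource "aws_s3_bucket" "this" {
  bucket = var.bucket_name
}

output "bucket_arn" {
  description = "ARN of the bucket, for use by callers."
  value       = aws_s3_bucket.this.arn
}
```

```hcl
# multi-cloud/aws/main.tf (caller)
module "logs_bucket" {
  source      = "../../modules/aws-storage" # assumes modules/ is a sibling of multi-cloud/
  bucket_name = "example-logs-dev"          # hypothetical name
}
```

The caller only sees the module's inputs and outputs, so the bucket's internals can change without touching every config that uses it.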

- Use environment configs

  - Create an “envs” folder and add settings for dev, test, and prod.

  - Each environment has its own backend, variables, and provider files.

  - This lets you deploy the same code to different stages with different settings.
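One possible shape for the per-environment variable files is sketched below. The file paths, variable names, and values are assumptions for illustration only; the matching variables would need to be declared in variables.tf.

```hcl
# envs/dev/terraform.tfvars (hypothetical)
environment    = "dev"
aws_region     = "us-east-1"
instance_count = 1
```

```hcl
# envs/prod/terraform.tfvars (hypothetical)
environment    = "prod"
aws_region     = "us-east-1"
instance_count = 3
```

You would then select an environment at run time by passing its file with `-var-file`, and its backend settings with `terraform init -backend-config=<file>`.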

- Use validation blocks

  - Use validation rules in variables.tf to enforce input contracts.

  - This catches bad inputs at plan time, before they reach production.
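A small sketch of what such rules can look like. The variable names and the allowed values are assumptions; adapt the conditions to your own input contract.

```hcl
# variables.tf
variable "environment" {
  type        = string
  description = "Deployment stage."

  validation {
    # Reject anything outside the three known stages.
    condition     = contains(["dev", "test", "prod"], var.environment)
    error_message = "The environment must be one of: dev, test, prod."
  }
}

variable "bucket_name" {
  type        = string
  description = "Name of the storage bucket."

  validation {
    # can() turns a failed regex match into false instead of an error.
    condition     = can(regex("^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$", var.bucket_name))
    error_message = "The bucket_name must be 3 to 63 characters of lowercase letters, digits, dots, or hyphens."
  }
}
```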

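- Secure remote state

  - For AWS, store state in S3. DynamoDB locking is optional, but worth enabling once more than one person or pipeline applies changes.

  - For Azure, use Blob Storage, which locks state natively through blob leases.

  - Locking and securing state prevents conflicting writes and data loss.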
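The two backends could be configured roughly like this. Every bucket, table, resource group, storage account, and key name below is a placeholder. Recent Terraform releases also offer S3-native locking through `use_lockfile`, so check the S3 backend documentation for your version before relying on the DynamoDB option.

```hcl
# multi-cloud/aws/backend.tf (hypothetical names)
terraform {
  backend "s3" {
    bucket         = "example-tfstate-bucket"
    key            = "aws/dev/terraform.tfstate"
    region         = "us-east-1"
    encrypt        = true               # encrypt state at rest
    dynamodb_table = "example-tf-locks" # optional; table needs a string partition key named LockID
  }
}
```

```hcl
# multi-cloud/azure/backend.tf (hypothetical names)
terraform {
  backend "azurerm" {
    resource_group_name  = "example-tfstate-rg"
    storage_account_name = "exampletfstate"
    container_name       = "tfstate"
    key                  = "azure/dev/terraform.tfstate"
  }
}
```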

- Define multi-region and multi-account support

  - Define providers with aliases to handle multiple regions or accounts.

  - This is essential for scaling across enterprise setups.
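A minimal sketch of aliased providers, assuming two regions and one extra account. The alias names, regions, role ARN, and bucket name are invented for illustration.

```hcl
# Default provider: used by any resource that does not name one.
provider "aws" {
  region = "us-east-1"
}

# Second region in the same account.
provider "aws" {
  alias  = "west"
  region = "us-west-2"
}

# Different account, reached by assuming a role (hypothetical ARN).
provider "aws" {
  alias  = "shared_services"
  region = "us-east-1"

  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/TerraformDeploy"
  }
}

# Pick a provider per resource with the provider meta-argument.
resource "aws_s3_bucket" "replica" {
  provider = aws.west
  bucket   = "example-replica-bucket"
}
```

Modules can receive an aliased provider the same way, through the `providers` argument on the `module` block.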

- Do not forget CI/CD best practices

  - Add “tflint” and “checkov” for static analysis and policy checks.

  - Separate “plan” and “apply” steps in your pipelines, with approvals, to reduce blast radius.
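On the linting side, a starting-point tflint configuration might look like the sketch below. The ruleset version is a placeholder, not a recommendation, so pin it to a release you have verified.

```hcl
# .tflint.hcl
plugin "terraform" {
  enabled = true
  preset  = "recommended"
}

plugin "aws" {
  enabled = true
  version = "0.30.0" # placeholder; pin to a verified release
  source  = "github.com/terraform-linters/tflint-ruleset-aws"
}
```

In the pipeline, one stage would run `tflint --init`, `tflint`, and `checkov -d .`, then `terraform plan -out=tfplan`. A later stage, gated by an approval, would run `terraform apply tfplan`, so only the reviewed plan gets applied.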

Why it’s useful:

This approach avoids duplication, isolates environments, and supports multiple clouds. It gives teams a safe, scalable Terraform foundation.

What else would you recommend?
